Author: Steven Palayew

Published: 17 Feb 2023

Description: Introducing our notification filtering model

ML Model Update 1 (Feb 13-17)

A Quick Reminder

Perhaps the most distinctive, and most complex, software feature of our smart ring is intelligent notification filtering. It uses machine learning to categorize incoming notifications as urgent or non-urgent, and activates an LED when an urgent message is received to discreetly inform the user that they should check their phone. Previous work has been done on notification filtering, for example by Meta, but the goal of that work was to present only the notifications most likely to capture the user's attention, in order to maximize engagement (for example, content from their favorite influencer). In contrast, the goal of our intelligent notification filtering feature is to notify users only of messages that would have tangible negative consequences if not seen quickly.

First Model

Previously, our model (MobileBERT trained on 119 text messages I generated manually) was able to achieve a modest but respectable test accuracy of 78.95%, and train accuracy of 85.42%. However, observing this gap along with the change in train and test accuracy with subsequent epochs, it was clear that the model was quickly overfitting on the training data. This was not overly surprising as our training set was very small, and therefore to rectify this issue I decided to generate more training data.

After manually generating 81 more datapoints, I quickly realized that developing a large and comprehensive training set would be extremely time-intensive, especially with the rest of the team focused on tackling challenging hardware problems. I therefore decided to try generating synthetic data.

Second Model

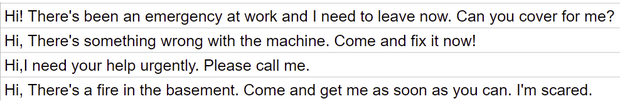

OpenAI’s GPT-3 was used to generate the synthetic data. Specifically, the Curie model was selected over the typically more performant Davinci model, because Davinci generated text messages that were too obviously urgent. While the messages Curie generated did sometimes have this issue, it was not as prevalent. Some example urgent synthetic text messages are shown below:

Synthetic training data samples


A total of 1800 synthetic messages were generated (900 urgent, 900 non-urgent). Two methods were considered for using this synthetic data: training a model on the synthetic data and then fine-tuning it further on real data, or simply training on mixed data. The latter is much easier to implement from an engineering perspective. Because of this, and because recent research indicates there may not be a significant performance difference between the two strategies, I decided to use the mixed data strategy. Specifically, the 1800 synthetic messages were added to the 200 real messages previously in the training set to produce a larger, 2000-message training dataset.
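As a rough illustration of the mixed data strategy, the sketch below concatenates real and synthetic examples and shuffles them into a single training set. It is a minimal sketch rather than our actual pipeline: the function name `build_mixed_dataset`, the `(text, label)` tuple format, and the placeholder messages and labels are all assumptions made for the example. In particular, the urgent/non-urgent split of the 200 real messages is not stated here, so the labels given to them below are arbitrary.

```python
import random


def build_mixed_dataset(real, synthetic, seed=0):
    """Concatenate real and synthetic (text, label) pairs and shuffle them.

    Hypothetical helper for illustration only.
    """
    mixed = list(real) + list(synthetic)
    # A seeded generator keeps the shuffle reproducible between runs.
    random.Random(seed).shuffle(mixed)
    return mixed


# Placeholder data standing in for the actual messages (label 1 = urgent).
# The real-message labels are arbitrary; the synthetic ones are 900/900.
real = [(f"real message {i}", i % 2) for i in range(200)]
synthetic = [(f"synthetic message {i}", i % 2) for i in range(1800)]

train_set = build_mixed_dataset(real, synthetic)
print(len(train_set))
```

Shuffling matters here because otherwise every batch early in an epoch would contain only real messages and every later batch only synthetic ones.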

The new model trained on this data achieved a test accuracy of 90.9% and a train accuracy of 99.8%: a big improvement! Note that an expanded test set with 22 examples was used to be more thorough. The original 19 test examples were still in this set, and our model got 17 of those 19 (89.5%) correct.
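For readers who want to check the arithmetic behind these percentages, here is a small sketch. The helper `accuracy_pct` is hypothetical, and two of the counts are inferred rather than reported: 20 correct out of 22 is the count consistent with 90.9%, and 15 correct out of 19 is the count consistent with the first model's 78.95%.

```python
def accuracy_pct(correct, total, ndigits=1):
    """Return accuracy as a percentage, rounded to ndigits decimal places."""
    return round(100 * correct / total, ndigits)


# Second model on the original 19 test messages (count stated above).
original_subset = accuracy_pct(17, 19)
# Second model on the expanded 22-message test set (count inferred from 90.9%).
expanded_set = accuracy_pct(20, 22)
# First model on the original 19 test messages (count inferred from 78.95%).
first_model = accuracy_pct(15, 19, ndigits=2)

print(original_subset, expanded_set, first_model)
```

With test sets this small, a single message changes the accuracy by roughly 4.5 to 5 percentage points, so these figures should be read as rough indicators.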

What’s Next

Stay tuned for my next blog post where we will take a deeper dive into the performance of our model, and see if there are any improvements that need to be made!